Compliance operates in a world of regulatory gray areas where context matters as much as data, and AI systems struggle with the nuances that human professionals navigate daily. Roman Eloshvili of ComplyControl argues that while artificial intelligence can handle the heavy lifting of data processing and anomaly detection, the final judgment calls require human insight, emotional intelligence and moral reasoning that machines simply cannot replicate, at least not yet.

AI is undoubtedly a powerful tool, but is it truly more effective than human oversight when it comes to compliance? To my mind, the short answer is: not yet. And perhaps not ever, at least not fully.

The world of compliance is messy. Things are rarely black and white here. Regulations often lack clarity and differ between jurisdictions, which means there is never a shortage of cases that fall into gray areas. AI, for all its speed and efficiency, still struggles to grasp the nuance and context of individual situations. Humans continue to hold the upper hand in that regard.

Imagine a compliance officer facing a case in which a person’s actions do not align with well-known guidelines but also don’t violate any explicit rule. AI might flag their behavior as odd, but it won’t understand the subtleties behind it. Detecting anomalies in data is not the same as interpreting social cues or other background factors that might be at play.

Even if AI does raise a red flag, it cannot offer any real insight into whether the situation actually signals a threat or simply a misunderstanding. A seasoned human specialist, on the other hand, can draw on years of experience and emotional intelligence to interpret what’s really going on. That makes them more flexible in decision-making, because they can often see the full picture in ways AI cannot.

A good example here is the 2008 financial crisis. It unfolded so rapidly that no algorithm available at the time, however advanced, could have handled it, let alone predicted or responded to its cascading effects. As a result, regulators had to make decisions based not on data models but on systemic risk assessments and gut instinct. When the US Federal Reserve stepped in to rescue AIG while allowing Lehman Brothers to collapse, it wasn’t following a model; it was making a strategic human intervention amid general chaos. It was all about intuition, experience and adaptability.

Of course, machines today are much smarter, but in moments of crisis, human traits such as the ability to read between the lines and cope with ambiguity still come to the fore. When the inputs are stable and the rules are clear, an AI model can work just fine, but in compliance such conditions are rare.

Constantly changing regulation tends to bring a lot of uncertainty, and companies often must make judgment calls in the absence of legal clarity. Artificial intelligence simply isn’t ready for that kind of high-stakes, morally complex decision-making. At least, not in its current development stage.

It is also important to mention the issue of bias: AI systems are only as good as the data they are trained on. If that data is incomplete or skewed, AI’s conclusions will reproduce the same biases without ever questioning them. And in compliance, there is always a risk that historical data reflects regional practices and standards that have long been outdated.

If an AI inherits this flawed information, it can end up making unfair or even dangerous decisions. Without human intervention, those errors can go unnoticed and unchecked, easily leading to any number of problems. It is necessary to have a professional on hand who can notice when something feels off and double-check it, even if the system says otherwise. This is how we ensure that decisions are made not only with precision but also with responsibility.

In short, while AI can enhance and accelerate any number of processes, it is not yet capable of taking the decision-making reins on its own. Human capacity for critical thinking, empathy and moral reasoning remains crucial to making compliance checks work properly.

The perfect pair for navigating compliance

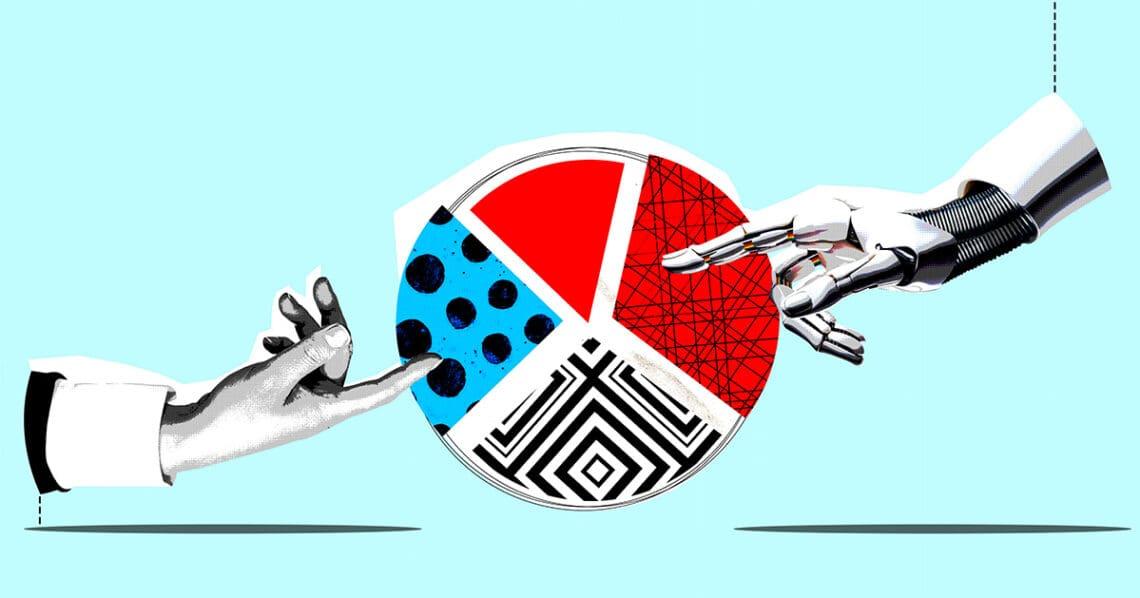

Given all of the above, I believe the best approach is to combine the strengths of both worlds: a hybrid model that pairs AI automation with the moral compass of humans.

Artificial intelligence can take care of the heavy lifting: crunching numbers, assembling the necessary data, speeding up processes and so on. Humans can then step in to ask the hard questions, understand the context and make the final judgment calls with a more nuanced view.

Here’s an interesting reference point: in machine learning, there is a commonly used approach called Human-in-the-Loop (HITL), in which humans are involved in the training process of models. While it does not relate directly to compliance, it lays the foundation for a more relevant model, called Human-Over-the-Loop, that is growing increasingly popular.

In this framework, people do not just collaborate with artificial intelligence; they monitor it and ensure that all of its decisions are subject to final human review. In compliance terms, this means AI might operate independently, scanning for suspicious behavior and flagging potential fraud cases, but the call on whether something is truly suspicious rests with trained compliance officers. Humans remain the final gatekeepers, deciding what to do with each alert they receive.
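To make this division of labor concrete, here is a minimal Python sketch of a Human-Over-the-Loop flow. It rests on stated assumptions: a simple z-score rule stands in for a real AI model, and the names `Alert`, `flag_transactions` and `resolve` are hypothetical, not ComplyControl’s system or any product’s API. The point it illustrates is structural: the automated layer only raises alerts, and no alert is closed without a human decision.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Callable, Optional


@dataclass
class Alert:
    """An automated flag awaiting a human decision (hypothetical structure)."""
    transaction_id: str
    amount: float
    score: float
    decision: Optional[str] = None  # set only by a human reviewer


def flag_transactions(transactions: dict[str, float], threshold: float = 2.0) -> list[Alert]:
    """Automated layer: flag amounts whose z-score exceeds `threshold`.

    Assumption: a z-score is an illustrative stand-in for a trained model.
    """
    amounts = list(transactions.values())
    mu, sigma = mean(amounts), pstdev(amounts)
    if sigma == 0:
        return []  # no variation, nothing stands out
    alerts = []
    for tx_id, amount in transactions.items():
        score = abs(amount - mu) / sigma
        if score > threshold:
            alerts.append(Alert(tx_id, amount, round(score, 2)))
    return alerts


def resolve(alerts: list[Alert], reviewer: Callable[[Alert], str]) -> list[Alert]:
    """Human-over-the-loop step: every alert receives a human decision.

    The automated layer never sets `decision` itself.
    """
    for alert in alerts:
        decision = reviewer(alert)
        if decision not in ("escalate", "dismiss"):
            raise ValueError(f"unknown decision: {decision!r}")
        alert.decision = decision
    return alerts


if __name__ == "__main__":
    history = {f"tx{i}": 100.0 for i in range(1, 10)}
    history["tx10"] = 5000.0
    flagged = flag_transactions(history)
    # The lambda simulates a reviewer; in practice this is a compliance officer's call.
    resolve(flagged, reviewer=lambda alert: "escalate")
    for alert in flagged:
        print(alert)
```

In this toy run only `tx10` is flagged, and it stays undecided until the reviewer function, standing in for a person, supplies a verdict.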

This kind of collaboration doesn’t just make processes faster — it makes them smarter, fairer and more adaptable. And given that compliance teams generally operate in complex, high-stakes environments, adaptability is what we need.

Conclusion

In the end, AI is not the enemy of human expertise, but it is not a replacement for it either. And it shouldn’t be treated as such. Instead, what it can and should be is an amplifier. Let it handle the grunt work: flag anomalies, sort data or generate reports. And let humans bring in-depth judgment and moral clarity to the table.

Compliance is not just about following rules; it is about knowing how to navigate gray zones, respond to uncertainty and make calls that machines can’t yet grasp. It’s about doing the right thing when the right thing isn’t always obvious. Real innovation does not come from the tools themselves but from how wisely we use them.

Roman Eloshvili is the founder of ComplyControl, a UK-based regulatory compliance provider in the finance sector.
