Model risk management gained traction in the risk landscape with the issuance of the joint Supervisory Guidance on Model Risk Management in 2011: SR 11-7/OCC 2011-12. This guidance differed from the earlier OCC Bulletin 2000-16 in that it required financial institutions to treat model risk as a risk category in its own right, similar to the existing major risk areas of credit, market and operational risk. Eventually this led to the creation of a new risk role, the Chief Model Risk Officer (CMRO); a new risk policy, the Model Risk Management Policy; and a new department, Model Governance, separate from the teams performing individual model validations. An entire lexicon of risk terms came into use: model definition, model risk management policy, model inventory, model life cycle, access controls, model change controls, etc.

Model Risk Fundamentals

SR 11-7 describes a model as “consisting of three components: an information input component, which delivers assumptions and data to the model; a processing component, which transforms inputs into estimates; and a reporting component, which translates the estimates into useful business information.”[1] At its core, then, a model is a computational process with three components.

Model risk arises through errors in the individual components, through the way the components are put together, or through the way the model is used. Data input errors can produce errors in model outputs. Errors in model specification, whether due to inappropriate conceptual design or methodology or to inaccurate implementation, can also produce inaccurate outputs. Finally, even if the data are reliable and sufficient and the algorithms are sound and properly implemented, model risk can still arise through misuse of model outputs.
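To make the three-component definition and its error sources concrete, the sketch below wires up a deliberately trivial, hypothetical model in Python: an input component that validates data, a processing component that turns exposures and an assumed loss rate into a projected loss, and a reporting component that formats the result. The class and function names, the input fields and the 2% loss rate are illustrative assumptions, not anything prescribed by SR 11-7; the point is only that each component is a distinct place where errors can enter.

```python
from dataclasses import dataclass

# Hypothetical toy model for illustration only; not a real capital model.


@dataclass
class Inputs:
    """Information input component: assumptions and data (hypothetical fields)."""
    balances: list[float]  # exposure per loan
    loss_rate: float       # assumed scenario loss rate


def load_inputs(balances: list[float], loss_rate: float) -> Inputs:
    # Input checks: one place where data input errors can be caught early.
    if any(b < 0 for b in balances):
        raise ValueError("negative balance in input data")
    if not 0.0 <= loss_rate <= 1.0:
        raise ValueError("loss rate must be between 0 and 1")
    return Inputs(balances, loss_rate)


def process(inputs: Inputs) -> float:
    # Processing component: transforms inputs into an estimate (toy expected loss).
    # A specification or implementation error here corrupts every output.
    return sum(inputs.balances) * inputs.loss_rate


def report(estimate: float) -> str:
    # Reporting component: translates the estimate into business information.
    return f"Projected loss: ${estimate:,.0f}"


print(report(process(load_inputs([1_000_000, 2_500_000], 0.02))))
# prints: Projected loss: $70,000
```

Misuse, the third source of model risk described above, sits outside the code entirely: a correct report can still be applied to a decision it was never designed to support.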

Inputs and outputs are both artifacts that fall within the traditional data management area, so the new emphasis on model risk focused attention on a key issue at many financial institutions: data. Data issues stem from data limitations, data quality problems and an overall lack of data governance. Because reliable model outputs are inextricably linked to the quality of data inputs, data quality assessment became an important part of model validations. In addition, since BCBS 239 took effect for global systemically important banks in January 2016, financial institutions need to consider how their data management systems comply with the 14 principles of BCBS 239.[2]

The Shot in the Arm

By itself, however, model risk management might have remained a relatively obscure function at the arcane intersection of risk management and quantitative finance. What brought it to prominence in the United States were the Dodd-Frank Act Stress Testing (DFAST) requirements, which call for stressing regulatory capital under supervisory scenarios at all banks with more than $10 billion in assets, and the Comprehensive Capital Analysis and Review (CCAR) stress testing requirements for banks with $50 billion or more in assets. CCAR banks must run stress tests using both the Fed-mandated scenarios and idiosyncratic scenarios designed to capture bank-specific risks.

Ironically, neither DFAST nor CCAR originally required banks to use models in performing stress tests. However, a capital management framework must cover credit, market, operational and liquidity risks under a range of scenarios, and each stress test must be tailored to an organization’s unique exposures, activities and risks, which is difficult to do without models. In practice, banks used models to estimate their regulatory capital under these scenarios, then aggregated the risks using a calculator or macro-model to arrive at a final capital number.

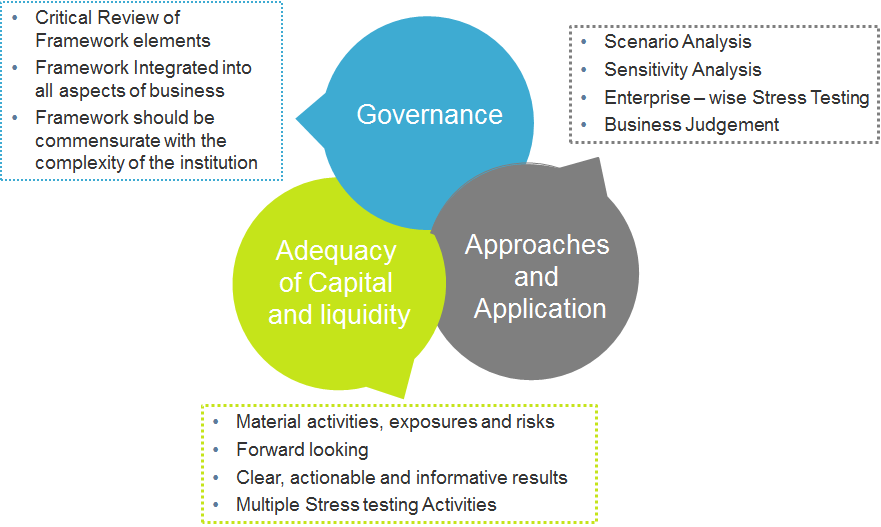

The following graphic illustrates some of the critical components of an effective capital management framework—a framework which for most banks includes heavy reliance on models for stress testing their various risks.

An Effective Capital Management Framework

Inevitably, as banks came to rely on models to estimate capital for DFAST and CCAR, regulators placed increasing emphasis on the governance (including validation) surrounding the use of those models.

New Guidance Issued in December 2015

In December 2015, the Federal Reserve issued two new supervisory letters: SR 15-18, Federal Reserve Supervisory Assessment of Capital Planning and Positions for LISCC Firms and Large and Complex Firms, and SR 15-19, Federal Reserve Supervisory Assessment of Capital Planning and Positions for Large and Noncomplex Firms. This guidance sets out capital planning expectations for governance, risk management, internal controls, capital policy, scenario design and projection methodologies. If the use of models had been theoretically optional in the past, it is now clear that banks cannot comply with the new guidance without robust and credible models.

The guidance, in fact, gives specific instructions on the use of models (both internally built and vendor models) and on the underlying data that feed them. If a model cannot be fully validated before use, a firm should conduct a conceptual soundness review of that model before using it. If benchmark or challenger models are used in the capital planning process, those models must also be validated. A sound management information system must support the capital planning process, including comprehensive reconciliation and data integrity checks. Any gaps or limitations in these processes must be made transparent to senior management.
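Reconciliation and data integrity checks can take many forms. As a minimal sketch, assuming a hypothetical loan-level dataset with `id`, `balance` and `segment` fields and a general-ledger control total to reconcile against, the Python below flags missing fields and duplicate records, then tests whether the model's input total agrees with the ledger within a 0.1% relative tolerance. The field names, figures and tolerance are illustrative assumptions, not values taken from SR 15-18 or SR 15-19.

```python
import math


def integrity_issues(records: list[dict], required: tuple[str, ...]) -> list[str]:
    """Flag duplicate record IDs and missing required fields (hypothetical schema)."""
    issues = []
    seen = set()
    for rec in records:
        rid = rec.get("id")
        if rid in seen:
            issues.append(f"duplicate id {rid}")
        seen.add(rid)
        for field in required:
            value = rec.get(field)
            if value is None or (isinstance(value, float) and math.isnan(value)):
                issues.append(f"id {rid}: missing {field}")
    return issues


def reconciles(model_total: float, ledger_total: float, tolerance: float = 0.001) -> bool:
    """True if the model's input total is within a relative tolerance of the ledger.

    The 0.1% default tolerance is an illustrative assumption.
    """
    if ledger_total == 0:
        return model_total == 0
    return abs(model_total - ledger_total) / abs(ledger_total) <= tolerance


# Hypothetical sample data with one missing segment and one duplicated ID.
loans = [
    {"id": 1, "balance": 1_000_000.0, "segment": "CRE"},
    {"id": 2, "balance": 2_500_000.0, "segment": None},
    {"id": 2, "balance": 500_000.0, "segment": "C&I"},
]
print(integrity_issues(loans, ("balance", "segment")))
# prints: ['id 2: missing segment', 'duplicate id 2']

model_total = sum(r["balance"] for r in loans)
print(reconciles(model_total, ledger_total=3_990_000.0))
# prints: False (a 0.25% break exceeds the 0.1% tolerance)
```

In this example the duplicate record is also what causes the reconciliation break, which is why integrity and reconciliation checks are usually run together rather than in isolation.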

Three Lines of Defense

A three-tiered approach to addressing model risk has emerged, with defined roles and responsibilities between the first, second and third lines of defense.

- The first line of defense is the model developer/owner, who is responsible for building the model to acceptable standards, consistent with the academic literature and industry practice, and for documenting it adequately. Documentation standards have become rigorous: the expectation is that a knowledgeable person could pick up the documentation and not only understand the model but also run it, use it and possibly modify it.

- The second line of defense is generally the model risk management team within the risk function. A full-scope validation is expected to be conducted by a person or team qualified to assess the model, and the validator must be independent of the model developer/builder. The validator must perform specific procedures, including a review of the governance surrounding the model; an assessment of the data inputs and of the conceptual design and framework; and tests of implementation accuracy and calculation processes, including back-tests of the outputs or other outcome analysis to assess their reasonableness. The validation must be thoroughly documented.

- Finally, the third line of defense, generally internal audit, reviews the work of model risk management to affirm that the model risk management framework provides effective challenge, that appropriate internal and external resources were used, that processes and procedures were properly followed and that data integrity was maintained throughout.
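Back-testing takes different forms depending on the model. One common outcome-analysis technique for a probability-of-default (PD) model is a one-sided binomial test: if the model's PD were correct, how likely is it that at least the observed number of borrowers would default? The Python sketch below assumes a hypothetical portfolio of 1,000 loans, a predicted PD of 2% and a 5% significance level, all illustrative. It also assumes defaults are independent; when defaults are correlated, as they are in real portfolios, the test tends to flag too readily, so it is best treated as one input among several.

```python
from math import exp, lgamma, log


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Binomial probability P(X = k), computed in log space to avoid overflow."""
    log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
               + k * log(p) + (n - k) * log(1 - p))
    return exp(log_pmf)


def backtest_pd(n: int, predicted_pd: float, observed_defaults: int,
                alpha: float = 0.05) -> dict:
    """One-sided binomial test of whether observed defaults exceed the model's PD.

    Assumes independent defaults; alpha = 5% is an illustrative choice.
    """
    if not 0.0 < predicted_pd < 1.0:
        raise ValueError("predicted PD must be strictly between 0 and 1")
    # p-value: chance of at least this many defaults if the predicted PD were right.
    p_value = sum(binomial_pmf(k, n, predicted_pd)
                  for k in range(observed_defaults, n + 1))
    return {
        "expected_defaults": n * predicted_pd,
        "observed_defaults": observed_defaults,
        "p_value": p_value,
        "flag": p_value < alpha,  # True: more defaults than the model plausibly explains
    }


print(backtest_pd(n=1000, predicted_pd=0.02, observed_defaults=30))
```

With 30 observed defaults against 20 expected, the p-value falls below 5% and the result is flagged for investigation; 22 defaults would not be flagged.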

When all three lines of defense work seamlessly to ensure that models are properly built, used and validated, executive management and the board of directors can take comfort that the model outputs used in critical business decisions are reliable.

Why Should Management and the Board Care?

Simply stated, when models are not properly built, used or validated, banks lose money. Here are a few examples that most people will recognize:

High-Profile Financial Losses Due to Model Failures

(Sources: WSJ, FT, CNN, Bloomberg, Reuters, The Telegraph, NYTimes)

In addition, many banks that failed DFAST or CCAR in the past several years fell short in the areas of governance or model risk management. Clearly, there are lessons to be learned here, and they are costly.

Benefits of Using Data and Analytics Effectively

Beyond the regulatory uses of models and the “stick” of failing DFAST/CCAR or otherwise running afoul of regulatory expectations, however, management and directors should keep in mind that the proper use of data and analytics (models) can enhance business decision-making. This is the “carrot” of effective model use, and it does not always receive sufficient focus, particularly when model risk management is viewed as a compliance exercise.

When data is timely, consistent and reliable, corporations can transform it into useful information through analytics (models), progressing from descriptive uses to predictive analytics and, ultimately, prescriptive strategies. By leveraging their own internal data to learn more about their risks and opportunities (for example, those relating to their customers’ behavior, preferences and buying patterns, or to their employees’ propensity to stay or leave), corporations can make better business decisions, reducing losses and enhancing profitability.

[1] www.federalreserve.gov/bankinforeg/srletters/sr1107.htm.

[2] www.bis.org/publ/bcbs268.htm and www.bis.org/bcbs/publ/d308.htm.

Shaheen Dil is a Managing Director and the Global Solution Leader for Data Management and Advanced Analytics for Protiviti. She has almost 30 years of experience in all aspects of risk management and analytics, both domestic and international. This includes enterprise-wide risk governance and reporting, risk modeling and model validation, assisting Internal Audit in reviewing models, data management, and predictive analytics. Prior to joining Protiviti, she was an Executive Vice President at PNC Financial Services Group, where she held various leadership positions, including Head of Risk Analytics, Leader of the Basel Implementation Program, Head of Model Validation, Chief Performance Officer and Head of Credit Portfolio Management.

Before that, Shaheen worked at Mellon Bank, initially as an Economist and then as a Senior Credit Approval Officer. She was selected as one of 2011's "Top Women in Retail and Finance" by Women of Color Magazine, and Consulting Magazine listed her as one of 2014's Women Leaders in Consulting. Shaheen holds a Ph.D. from Princeton University, master's degrees from Princeton and the School of Advanced International Studies (SAIS) at Johns Hopkins University, and a B.A. from Vassar College. Shaheen has published widely on risk topics and is a frequent speaker at national conferences and industry associations.
